使用方法:

- 修改主控IP地址,编译后在bin文件夹中运行./run执行文件,激活机器人在进入站立后按遥控Start按键进入自动模式

项目简介:

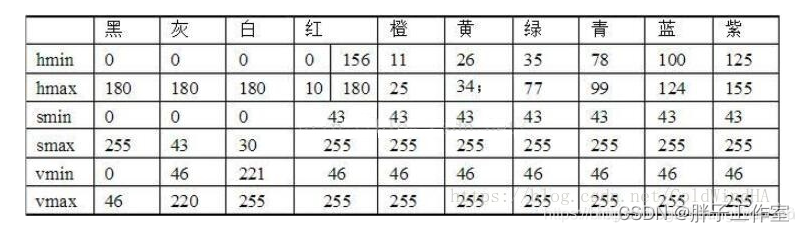

通过OpenCV的HSV颜色通道可以完成对不同颜色小球的识别,典型的颜色通道HSV值可以设置如下,通过调节拖动条可以快速完成对识别颜色的改变:

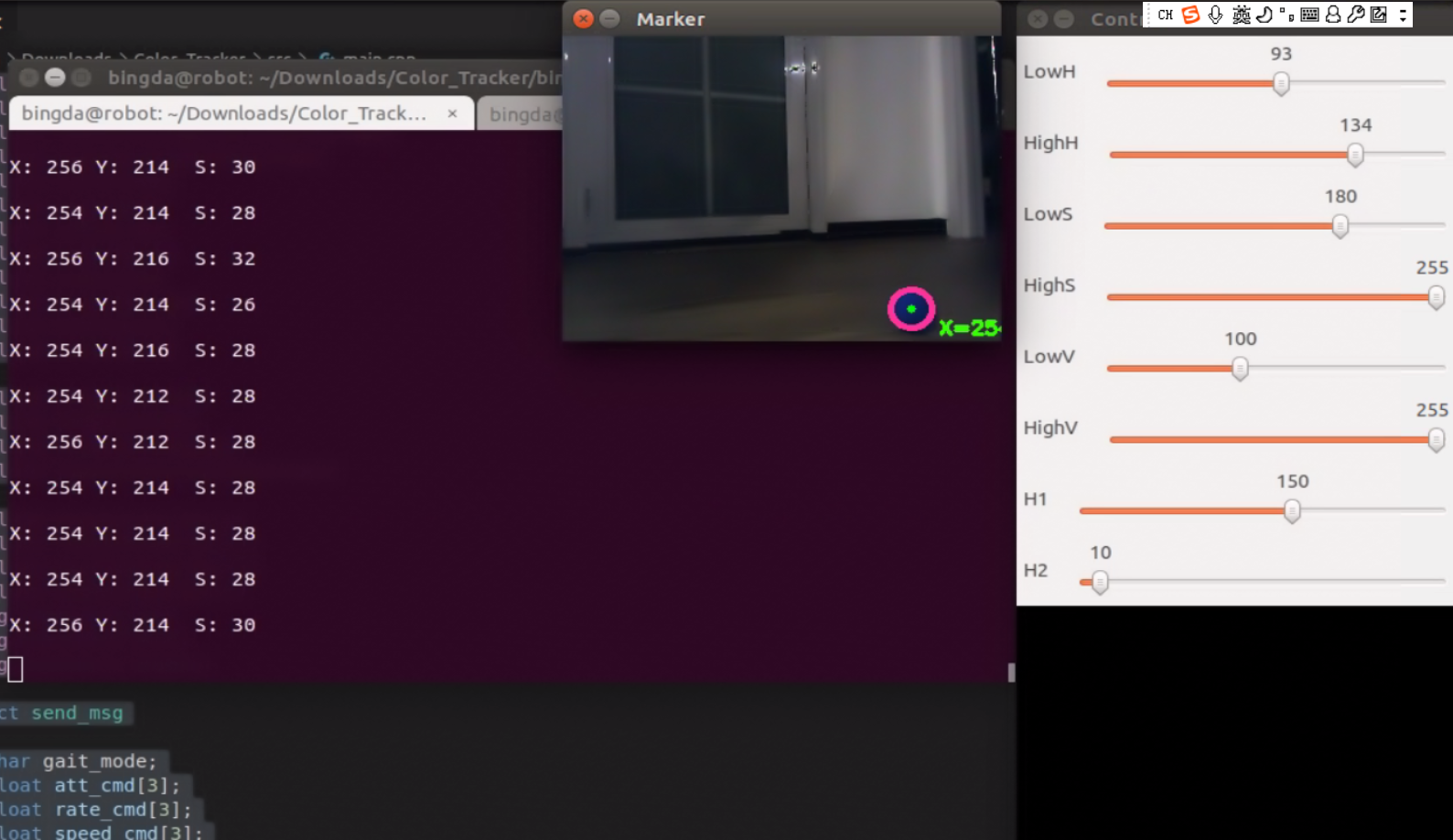

蓝色小球识别结果

则通过识别可以得到不同色块在图像中的位置与尺寸,在识别出色块后进一步采用霍夫变换实现对圆的拟合,提取和圆相近似的色块区域,设置H1和H2参数能剔除不同尺寸的小球,并要求圆形更精确的识别结果:

HoughCircles(contours, circles, CV_HOUGH_GRADIENT, 1, H1, 170, H2, 0, 255);

在识别出小球的中心和尺寸后我们通过下发机器人线速度、转向速度和姿态完成对小球的追踪,下列接口中att0为俯仰轴att1为横滚轴单位为度, rate2为转向速度单位为度每秒,speed0为X速度speed1为Y速度单位为米每秒:

struct send_msg

{

char gait_mode;

float att_cmd[3];

float rate_cmd[3];

float speed_cmd[3];

float pos_cmd[3];

} _send_msg;通过小球尺寸完成对前后速度的控制,我们设置一个期望小球尺寸大小tar_pix,并采用其误差结果构建简单的P控制器。

进一步采用Y轴误差完成对机器人俯仰角度的调节保证机器人一直“看着”小球,同理采用X轴误差完成对转向速度的调节,该控制量会产生一定权重用于产生侧向速度值:

int tar_pix=25;

int err_pix=(tar_pix-Target_Pix.z);

if(err_pix>10)err_pix=10;

if(err_pix<-10)err_pix=-10;

_send_msg.gait_mode=1;

_send_msg.speed_cmd[0]=err_pix*0.022*flt_cmd+(1-flt_cmd)*_send_msg.speed_cmd[0];

err_pix=(160-Target_Pix.x);

if(err_pix>25)err_pix=25;

if(err_pix<-25)err_pix=-25;

if(fabs(err_pix)<3)err_pix=0;

_send_msg.rate_cmd[2]=err_pix*0.003*10*flt_cmd+(1-flt_cmd)*_send_msg.rate_cmd[2];

_send_msg.speed_cmd[1]=err_pix*0.0025*flt_cmd+(1-flt_cmd)*_send_msg.speed_cmd[1];

err_pix=(120-Target_Pix.y);

if(err_pix>8)err_pix=8;

if(err_pix<-8)err_pix=-8;

if(fabs(err_pix)<3)err_pix=0;

_send_msg.att_cmd[0]=err_pix*0.1*10*flt_att+(1-flt_att)*_send_msg.att_cmd[0];为避免识别结果跳动带来的抖动,通过增加滤波平滑识别结果,产生更流畅的跟踪效果,最终将控制指令采用UDP接口基于SDK设定下发到机器人中,最终部署在Tinymal-B上的效果如下,视频中处理器为Jetson Nano,相机为奥比中光Mini深度相机:

如上所述。识别小球实际类似RM比赛中队装甲识别与打击,更复杂的处理可以增加KF滤波器实现对小球位置的预测,增强识别连续性,进一步跟踪小球采用全局位置规划,将所有识别结果均转换为XY物理坐标,在地图上完成跟踪轨迹的规划:

https://blog.csdn.net/weixin_46214675/article/details/115407820

https://zhuanlan.zhihu.com/p/416449365

项目代码:

- 代码如下:

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/highgui/highgui_c.h>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/core/core.hpp>

#include <iostream>

#include <sstream>

#include <stdio.h>

#include <unistd.h>

#include <stdio.h>

#include <sys/time.h>

#include <string.h>

#include <unistd.h>

#include <Eigen/Dense>

#include <Eigen/Core>

#include <Eigen/Geometry>

#include <opencv2/core/eigen.hpp>

#include <sys/types.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <arpa/inet.h>

using namespace cv;

using namespace std;

using namespace Eigen;

struct send_msg

{

char gait_mode;

float att_cmd[3];

float rate_cmd[3];

float speed_cmd[3];

float pos_cmd[3];

} _send_msg;

Point3f Target_Pix,Target_Pos,Target_Att;

int Target_check_num=0;

int main()

{

VideoCapture cap;

cap.open(-1);

cv::Size InImage_size(320,240);

ostringstream ostr_pos;

ostringstream ostr_att;

int iLowH = 93;

int iHighH = 134;

int iLowS = 180;

int iHighS = 255;

int iLowV = 100;

int iHighV = 255;

int H1 = 150;

int H2 = 10;

namedWindow("Control", CV_WINDOW_AUTOSIZE);

cvCreateTrackbar("LowH", "Control", &iLowH, 179); //Hue (0 - 179)

cvCreateTrackbar("HighH", "Control", &iHighH, 179);

cvCreateTrackbar("LowS", "Control", &iLowS, 255); //Saturation (0 - 255)

cvCreateTrackbar("HighS", "Control", &iHighS, 255);

cvCreateTrackbar("LowV", "Control", &iLowV, 255); //Value (0 - 255)

cvCreateTrackbar("HighV", "Control", &iHighV, 255);

cvCreateTrackbar("H1", "Control", &H1, 255); //Value (0 - 255)

cvCreateTrackbar("H2", "Control", &H2, 255);

//----------------------UDP init----------------------------

int SERV_PORT= 8888;

int sock_fd;

sock_fd = socket(AF_INET, SOCK_DGRAM, 0);

if(sock_fd < 0)

{

exit(1);

}

struct sockaddr_in addr_serv;

int len;

memset(&addr_serv, 0, sizeof(addr_serv));

addr_serv.sin_family = AF_INET;

string UDP_IP="192.168.1.106";

addr_serv.sin_addr.s_addr = inet_addr(UDP_IP.c_str());

addr_serv.sin_port = htons(SERV_PORT);

len = sizeof(addr_serv);

printf("UPD Tinymal-ROS CV Port Open Suess!!");

int recv_num=0,send_num=0;

char send_buf[1024*100]={0},recv_buf[1024*10]={0};

while (1)

{

Mat frame;

cap >> frame;

if(!frame.empty()){

resize(frame, frame, InImage_size);

//flip(frame, frame, -1);

}

else{

cout<<"Camera Fail!!"<<endl;

return 0;

}

float alpha=4,beta=1.0;

for(int i=0;i<frame.rows;i++)

for (int j = 0; j < frame.cols; j++) {

for (int k = 0; k < 3; k++) {

int tmp= (uchar)frame.at<Vec3b>(i, j)[k] * alpha + beta;

if (tmp > 255)

frame.at<Vec3b>(i, j)[k] = 2 * 255 - tmp;

else

frame.at<Vec3b>(i, j)[k] = tmp;

}

}

Target_check_num=0;

Mat imgHSV;

vector<Mat> hsvSplit;

cvtColor(frame, imgHSV, COLOR_BGR2HSV);

split(imgHSV, hsvSplit);

equalizeHist(hsvSplit[2],hsvSplit[2]);

merge(hsvSplit,imgHSV);

Mat imgThresholded;

inRange(imgHSV, Scalar(iLowH, iLowS, iLowV), Scalar(iHighH, iHighS, iHighV), imgThresholded); //Threshold the image

Mat element = getStructuringElement(MORPH_RECT, Size(5, 5));

morphologyEx(imgThresholded, imgThresholded, MORPH_OPEN, element);

morphologyEx(imgThresholded, imgThresholded, MORPH_CLOSE, element);

imshow("Control", imgThresholded); //show the thresholded image

Mat edges;

Mat contours;

vector<Vec3f> circles;

//cvtColor(imgThresholded, edges, CV_BGR2GRAY);

Canny(imgThresholded, contours, 125, 350);

threshold(contours, contours, 128, 255, THRESH_BINARY);

//imshow("contours", contours);

HoughCircles(contours, circles, CV_HOUGH_GRADIENT,

1, H1, 170, H2, 0, 255);

for (size_t i = 0; i < circles.size(); i++)

{

Target_check_num=circles.size();

Point center(cvRound(circles[i][0]), cvRound(circles[i][1]));

int radius = cvRound(circles[i][2]);

Target_Pix.x=center.x;

Target_Pix.y=center.y;

Target_Pix.z=radius*2;

circle(frame, center, 3, Scalar(0, 255, 0), -1, 8, 0);

circle(frame, center, radius, Scalar(155, 50, 255), 3, 8, 0);

cout<<"X: "<<Target_Pix.x<<" Y: "<<Target_Pix.y<<" "<<" S: "<<Target_Pix.z<<endl;

cout<<endl;

}

if(Target_check_num){

ostr_pos.clear();

ostr_pos.str("");

ostr_pos << "X=" << (int)Target_Pix.x << " Y=" << (int)Target_Pix.y<< " Z=" << (int)Target_Pix.z;

putText(frame, ostr_pos.str(), Point(Target_Pix.x+20, Target_Pix.y+20),

CV_FONT_HERSHEY_SIMPLEX, 0.5, CV_RGB(55, 255, 0), 2);

}

imshow("Marker", frame);

//Control

float flt_cmd=0.05;

float flt_att=0.025;

if(Target_check_num==1){

int tar_pix=25;

int err_pix=(tar_pix-Target_Pix.z);

if(err_pix>10)err_pix=10;

if(err_pix<-10)err_pix=-10;

_send_msg.gait_mode=1;

_send_msg.speed_cmd[0]=err_pix*0.022*flt_cmd+(1-flt_cmd)*_send_msg.speed_cmd[0];

err_pix=(160-Target_Pix.x);

if(err_pix>25)err_pix=25;

if(err_pix<-25)err_pix=-25;

if(fabs(err_pix)<3)err_pix=0;

_send_msg.rate_cmd[2]=err_pix*0.003*10*flt_cmd+(1-flt_cmd)*_send_msg.rate_cmd[2];

_send_msg.speed_cmd[1]=err_pix*0.0025*flt_cmd+(1-flt_cmd)*_send_msg.speed_cmd[1];

err_pix=(120-Target_Pix.y);

if(err_pix>8)err_pix=8;

if(err_pix<-8)err_pix=-8;

if(fabs(err_pix)<3)err_pix=0;

_send_msg.att_cmd[0]=err_pix*0.1*10*flt_att+(1-flt_att)*_send_msg.att_cmd[0];

if(_send_msg.att_cmd[0]>10) _send_msg.att_cmd[0]=10;

if(_send_msg.att_cmd[0]<-10) _send_msg.att_cmd[0]=-10;

}else{

_send_msg.speed_cmd[0]=0*flt_cmd+(1-flt_cmd)*_send_msg.speed_cmd[0];

_send_msg.speed_cmd[1]=0*flt_cmd+(1-flt_cmd)*_send_msg.speed_cmd[1];

_send_msg.rate_cmd[2]=0*flt_cmd+(1-flt_cmd)*_send_msg.rate_cmd[2];

_send_msg.att_cmd[0]=0*flt_att+(1-flt_att)*_send_msg.att_cmd[0];

}

//UDP Send

memcpy(send_buf,&_send_msg,sizeof(_send_msg));

send_num = sendto(sock_fd, send_buf, sizeof(_send_msg), MSG_DONTWAIT, (struct sockaddr *)&addr_serv,len);

recv_num = recvfrom(sock_fd, recv_buf, sizeof(recv_buf), MSG_DONTWAIT, (struct sockaddr *)&addr_serv, (socklen_t *)&len);

if(recv_num <= 0)

{

}

else{//接收机器人底盘 里程计等数据

//解码

//memcpy(&_recv_msg,recv_buf,sizeof(_recv_msg));

}

char c = (char)waitKey(20);

if( c == 27 )

break;

}

}

- Cmakelist脚本:

cmake_minimum_required(VERSION 2.6)

SET(PROJECT_NAME run)

PROJECT(${PROJECT_NAME})

set( CMAKE_BUILD_TYPE "Release" )

set( CMAKE_CXX_FLAGS "-std=c++11 -O3" )

set(CMAKE_PREFIX_PATH ${CMAKE_PREFIX_PATH} "/usr/local/share/OpenCV")

set(EXECUTABLE_OUTPUT_PATH ${PROJECT_BINARY_DIR}/../bin)

find_package(OpenCV REQUIRED)

message(STATUS " version: ${OpenCV_VERSION}")

set(ARUCO_PATH /usr/local)

SET(CMAKE_MODULE_PATH ${ARUCO_PATH}/lib/cmake )

include_directories(

${OpenCV_INCLUDE_DIRS}

"./src/include"

"./build"

"/usr/include/eigen3/"

"/usr/local/include"

)

AUX_SOURCE_DIRECTORY(src DIR_SRCS)

ADD_EXECUTABLE(${PROJECT_NAME} ${DIR_SRCS})

TARGET_LINK_LIBRARIES(${PROJECT_NAME} ${OpenCV_LIBS})